The source code for this article can be found here.

Welcome to another cloud experiment! The idea behind these hands-on tutorials is to provide practical experience building cloud-native solutions of different sizes using AWS services and CDK. We’ll focus on developing expertise in Infrastructure as Code, AWS services, and cloud architecture while understanding both the “how” and “why” behind our choices.

A Small Serverless Notification Solution

This time, you’re not trying to solve some problem at work—you just want to get a new console. The Nintendo Switch 2 released a few weeks back, and as expected by almost everyone, the demand for the console is far larger than the amount of stock available.

The situation right now is not that terrible, and there seems to be a bit more stock available on several online retailers, but you would really like to be able to get it as soon as possible from one of the shops in your area. Because of that, you decided to write a simple notification workflow that will let you know as soon as it becomes available.

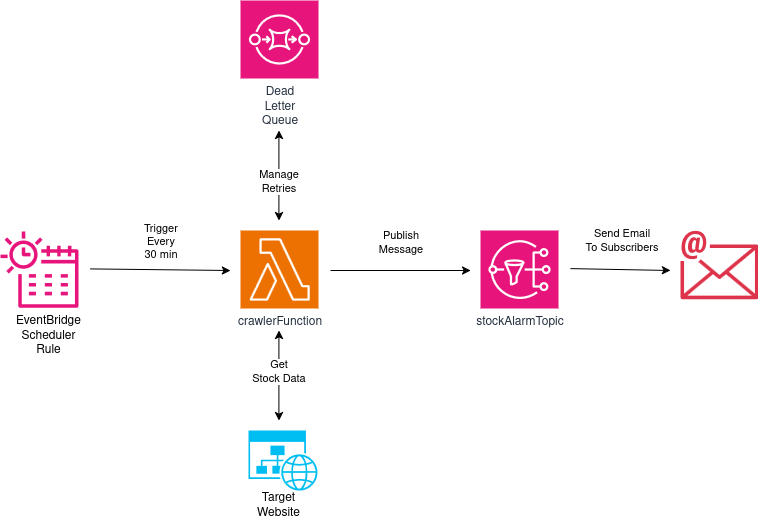

The design looks like this:

- An EventBridge rule with a schedule will trigger a Lambda function every 30 minutes. Twice per hour is a reasonable frequency; being notified on the same day the console becomes available is more than enough.

- Things could go wrong when our function is invoked, so we will configure a dead letter queue (DLQ) to keep failed events for a bit, and configure the rule’s target to retry a few times before giving up.

- Our Lambda function will retrieve HTML from the online store’s product page, and attempt to figure out whether the product is available or not based on that.

- If the product is available, the Lambda function will publish a message on an SNS Topic. We will create an email subscription (with our own personal email) for this SNS topic, so we will be notified when messages get published there.

We know what to do, so let’s start building!

Creating Our Project

Same old standard procedure: First, we just need to create an empty folder (I named mine InventoryStockAlarm) and run cdk init app --language typescript inside it.

This next change is totally optional, but the first thing I do after creating a new CDK project is to head into the bin folder and rename the app file to main.ts. Then I open the cdk.json file and edit the app config like this:

    {
      "app": "npx ts-node --prefer-ts-exts bin/main.ts",
      "watch": {
        ...
      }
    }

Now your project will recognize main.ts as the main application file. You don’t have to do this—I just like having a file named main serving as the main app file.

Stack Imports and Creating the Scheduler Rule

From looking at the diagram, we know we’ll need the following imports at the top of the stack:

    import * as cdk from "aws-cdk-lib";
    import { Construct } from "constructs";
    import { aws_events as events } from "aws-cdk-lib";
    import { aws_events_targets as event_targets } from "aws-cdk-lib";
    import { aws_lambda as lambda } from "aws-cdk-lib";
    import { aws_sns as sns } from "aws-cdk-lib";
    import { aws_sqs as sqs } from "aws-cdk-lib";
    import { aws_sns_subscriptions as sns_subs } from "aws-cdk-lib";

Creating a schedule rule using CDK is easy—the only property we will need is a schedule to make sure it emits an event every half hour, like this:

    const crawlingSchedule = new events.Rule(this, "crawlingSchedule", {
      schedule: events.Schedule.rate(cdk.Duration.minutes(30)),
    });

Creating the SNS Topic and a DLQ

We will need an SNS topic for receiving messages from the Lambda function and forwarding them to an email address. We will also need an SQS queue to serve as the dead letter queue: events that still fail after the configured retries end up there.

We will create our SNS Topic as:

    const stockAlarmTopic = new sns.Topic(this, "stockAlarmTopic", {
      topicName: "stockAlarmTopic",
      displayName:
        "SNS Topic to notify me by email if the product is available",
    });

The topicName overrides the default behavior of assigning an auto-generated name to the topic, which makes finding it easier. The displayName will appear on the emails we receive from this topic, as we will see later when we test our solution.

And then we create our SQS queue:

const dlq = new sqs.Queue(this, "deadLetterQueue");

Creating this type of resource is usually a very simple endeavor—most of the fun comes from integrating them with other services, like we will do next.

Creating Our Crawler Lambda Function

We need a Lambda function that:

- Retrieves data from a given URL

- Performs some type of analysis on the HTML it receives and figures out whether the product is available

- If the product is in stock, it should publish a message on an SNS topic

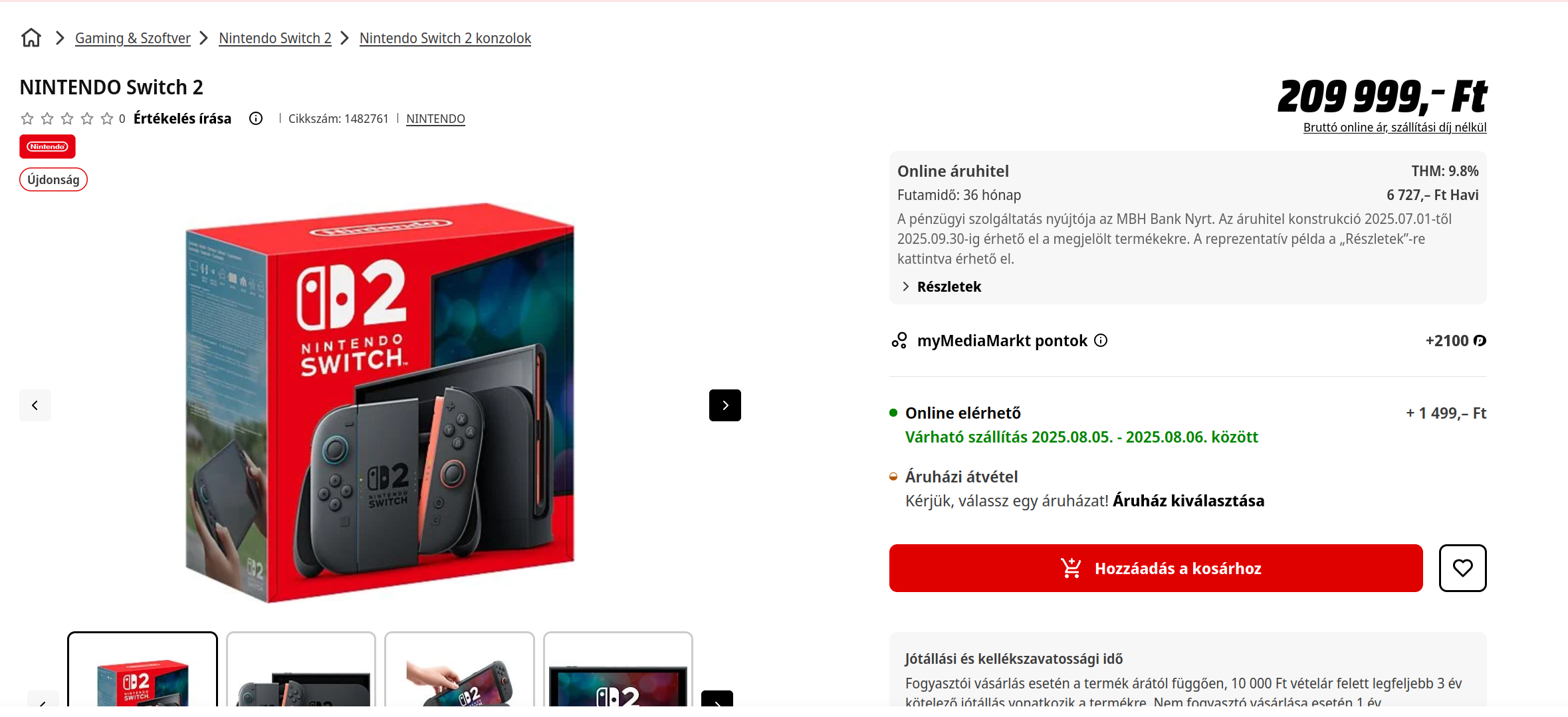

I am interested in the availability of the console in a local store, so let’s check what that it looks like for both available and unavailable products. I found out that they currently have stock for the standard Switch 2 console, and it looks like this:

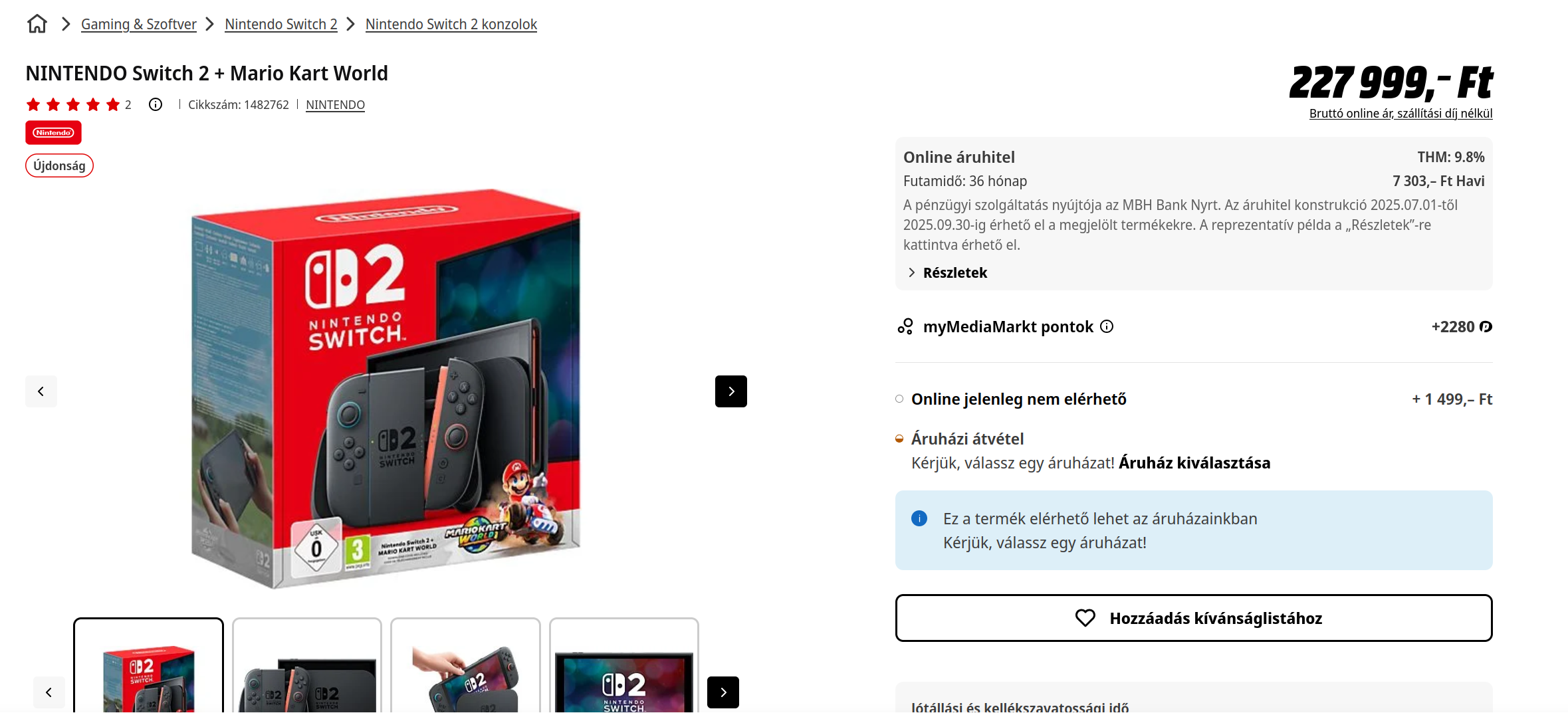

For the bundle that comes with Mario Kart, we get this:

You can see that the main difference is the section that says Online elérhető (Available Online, in Hungarian), so we should probably check for that string on the HTML we get will get back on our request.

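Create a folder called lambdas at the project’s root level (alongside bin and lib), then a folder called crawler inside it (the stack will later load the function’s code from lambdas/crawler), and within that folder create a file called crawler.py: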

    import os

    import boto3
    import requests
    from bs4 import BeautifulSoup

    # Created once per execution environment and reused across invocations
    client = boto3.client('sns')


    def handler(event, context):
        product_url = os.environ.get('PRODUCT_URL')
        availability_string = os.environ.get('AVAILABILITY_STRING')
        sns_arn = os.environ.get('SNS_ARN')

        # Most stores reject requests that lack a browser-like user agent
        headers = {
            'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:141.0) Gecko/20100101 Firefox/141.0'
        }
        # The timeout keeps a stalled request from hanging the whole invocation
        response = requests.get(product_url, headers=headers, timeout=10)

        # find_all(string=...) returns the text nodes that exactly match the string
        findings = BeautifulSoup(response.text, features="html.parser").find_all(string=availability_string)

        if findings:
            subject = "Product availability notice"
            message = "The product is available!"
            response = client.publish(
                Message=message,
                Subject=subject,
                TargetArn=sns_arn,
            )

Let’s go section by section to understand what it does:

- We import only what we need: the os module for retrieving environment variables, the requests module for performing an HTTP request targeting an online store, the boto3 module (the Python AWS SDK) for publishing a message on the SNS topic, and BeautifulSoup for parsing HTML and searching within it.

- We create an SNS client outside of the function’s handler. We don’t want to create clients on every Lambda invocation, and instantiating them outside of the handler lets us share any resource or connection across invocations while the execution environment remains warm.

- From environment variables we pick the product’s URL, the string we will scan for when trying to establish whether the product is available (Online elérhető), and the ARN of the SNS topic we will send messages to if we find available stock.

- We perform a GET request using the product URL provided, and we pass a header with the user agent. This is important because most websites instantly block requests that lack a browser user agent to prevent crawlers from retrieving data (ahem). We then use BeautifulSoup to scan the HTML and figure out whether it matches our availability string.

- If there is a finding (a match!) we perform a call to the client’s publish function, with a subject and message that will be part of the email sent to the user.

I have to note that this is really, really poor code. Web crawling is not my forte and the function’s quality is not the point of this lab (architecture and overall design are), but this is probably not how you want to build code for crawling the web. The design is brittle: small changes to the website, or pointing it at another site, will likely break the code, and we are not handling any of the many exceptions that can be raised during execution. Worth pointing out, but let’s not get stuck on it too much. Moving on.
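For anyone who does want a slightly sturdier starting point, here is a minimal sketch of two of the gaps mentioned above: failing early with a clear message when configuration is missing, and matching the availability string more leniently (ignoring extra whitespace, letter case, and text inside script or style tags). This is not the code used in this article. The names load_config and is_available are hypothetical helpers, and the sketch uses Python’s built-in html.parser instead of BeautifulSoup only to stay dependency-free.

```python
from html.parser import HTMLParser
from typing import Mapping, NamedTuple

REQUIRED_VARIABLES = ("PRODUCT_URL", "AVAILABILITY_STRING", "SNS_ARN")


class CrawlerConfig(NamedTuple):
    product_url: str
    availability_string: str
    sns_arn: str


def load_config(env: Mapping[str, str]) -> CrawlerConfig:
    """Read the three settings, raising a clear error if any is missing or empty."""
    missing = [name for name in REQUIRED_VARIABLES if not env.get(name)]
    if missing:
        raise ValueError("Missing environment variables: " + ", ".join(missing))
    return CrawlerConfig(env["PRODUCT_URL"], env["AVAILABILITY_STRING"], env["SNS_ARN"])


class _TextCollector(HTMLParser):
    """Collects text content, skipping anything inside <script> or <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def is_available(html: str, availability_string: str) -> bool:
    """Return True if the availability string appears in the page's text."""
    collector = _TextCollector()
    collector.feed(html)
    collector.close()
    # Collapse runs of whitespace so line breaks in the markup do not matter
    page_text = " ".join(" ".join(collector.chunks).split())
    needle = " ".join(availability_string.split())
    return bool(needle) and needle.casefold() in page_text.casefold()
```

Inside the handler, load_config(os.environ) would replace the three os.environ.get calls, and is_available(response.text, config.availability_string) would replace the exact-match search. A substring match is more forgiving than an exact one, but it can also produce false positives if the same words appear elsewhere on the page, so it is worth checking against the real page before relying on it.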

In our stack we can create the Lambda function. Remember to add bundling options to install dependencies and pass the environment variables needed for the function to operate properly.

    const crawlerFunction = new lambda.Function(this, "crawlerFunction", {
      runtime: lambda.Runtime.PYTHON_3_13,
      code: lambda.Code.fromAsset("lambdas/crawler", {
        bundling: {
          image: lambda.Runtime.PYTHON_3_13.bundlingImage,
          command: [
            "bash",
            "-c",
            "pip install --no-cache requests beautifulsoup4 -t /asset-output && cp -au . /asset-output",
          ],
        },
      }),
      handler: "crawler.handler",
      timeout: cdk.Duration.seconds(30),
      environment: {
        PRODUCT_URL: props.productUrl,
        AVAILABILITY_STRING: props.availabilityString,
        SNS_ARN: stockAlarmTopic.topicArn,
      },
      description:
        "Function for crawling a website in search of available stock",
    });

    crawlingSchedule.addTarget(
      new event_targets.LambdaFunction(crawlerFunction, {
        deadLetterQueue: dlq,
        maxEventAge: cdk.Duration.minutes(20), // Set the maxEventAge retry to 20 minutes
        retryAttempts: 2,
      })
    );

Notice that in the last section of this code block we create a Lambda target for our schedule. With this in place, the rule will invoke our Lambda function every 30 minutes. The target also configures the dead letter queue, sets the maximum event age to 20 minutes, and limits the number of retry attempts to 2.
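To see how the two retry limits interact, here is a deliberately simplified model in Python. It assumes an event is given up on as soon as either limit is reached, whichever comes first. The delays between retries are made-up inputs: the real backoff timings are chosen by EventBridge and are not modeled here, and attempts_before_giving_up is a hypothetical helper, not part of any AWS SDK.

```python
from datetime import timedelta
from typing import Sequence


def attempts_before_giving_up(
    retry_delays: Sequence[timedelta],
    max_event_age: timedelta,
    retry_attempts: int,
) -> int:
    """Count delivery attempts (initial try plus retries) in the simplified model."""
    attempts = 1  # the initial delivery attempt
    elapsed = timedelta(0)
    # Only the first `retry_attempts` delays can ever be used
    for delay in retry_delays[:retry_attempts]:
        elapsed += delay
        if elapsed > max_event_age:
            break  # the event is now too old, so no further retry happens
        attempts += 1
    return attempts


minutes = lambda n: timedelta(minutes=n)

# Short waits: both retries fit within the 20-minute window
quick = attempts_before_giving_up([minutes(5), minutes(10)], minutes(20), 2)

# Long waits: the second retry would happen at minute 30, past the limit
slow = attempts_before_giving_up([minutes(15), minutes(15)], minutes(20), 2)
```

Under these assumptions, quick is 3 (one initial attempt plus two retries) and slow is 2. Keeping the maximum event age below the 30-minute schedule interval also means a stale event is abandoned before the next scheduled event arrives.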

Creating an Email Subscription for Our SNS Topic

We need to create a subscription that lets the topic send us email. This is just a matter of calling addSubscription on our topic and adding whichever email address should receive the messages, like this:

    stockAlarmTopic.addSubscription(
      new sns_subs.EmailSubscription(props.targetEmail)
    );

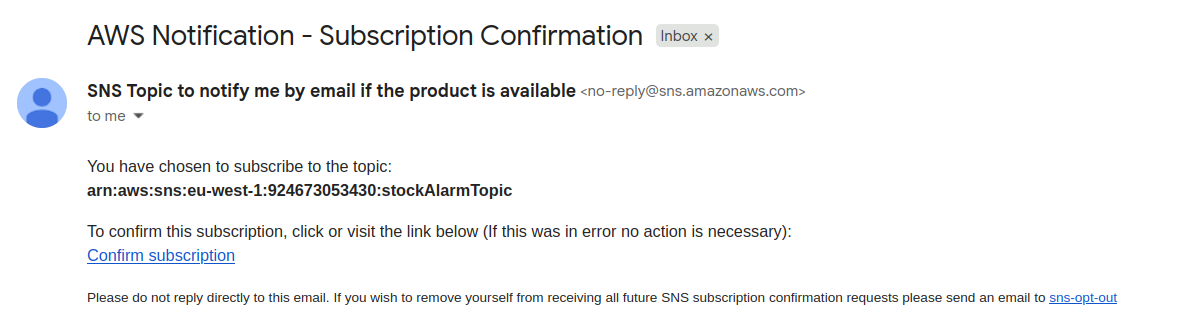

When the stack gets deployed for the first time, we will receive a confirmation email that looks like this:

AWS makes sure that SNS subscriptions go through a two-step process to ensure you are not maliciously subscribing unsuspecting email addresses to a topic that will later be used to send spam. You just need to click on Confirm Subscription, so AWS will know this is a legit subscription.

Custom Stack Props

So far, we have been referencing a few stack props that are not defined anywhere (props.targetEmail, props.productUrl, and props.availabilityString), so let’s fix that.

At the top of your stack, before the stack’s class definition, add the following interface:

    interface InventoryStockAlarmStackProps extends cdk.StackProps {
      targetEmail: string;
      productUrl: string;
      availabilityString: string;
    }

Then, in the constructor’s definition change the props type from cdk.StackProps to the one we just created, like this:

    constructor(
      scope: Construct,
      id: string,
      props: InventoryStockAlarmStackProps
    )

Adding Permissions and Creating the Stack

My favorite part of working with CDK is probably how easy it is to grant permissions to entities within AWS. We need just one:

- crawlerFunction must be able to publish messages to stockAlarmTopic

In CDK, this permission is defined as:

stockAlarmTopic.grantPublish(crawlerFunction);

And we are done. Now we can go into main.ts and create our stack:

    new InventoryStockAlarmStack(app, 'InventoryStockAlarmStack', {
      targetEmail: 'jl.orozco.villa@gmail.com',
      productUrl: 'https://www.mediamarkt.hu/hu/product/_nintendo-switch-2-mario-kart-world-1482762.html',
      availabilityString: 'Online elérhető',
    });

I decided to use the URL for the Mario Kart bundle—it’s currently unavailable and I’d like to be notified as soon as it’s available for online purchase.

Testing the Stack

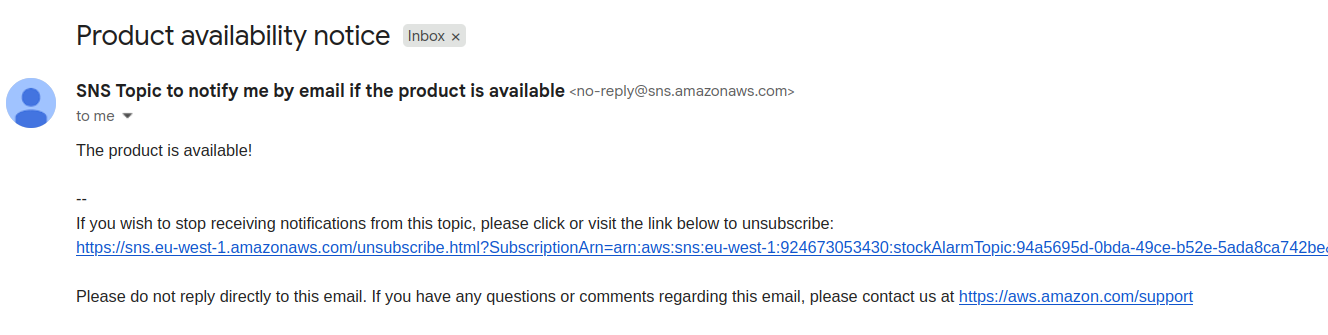

That’s pretty much it! Now you just need to wait until stock becomes available and you will receive an email that looks like this:

Then, you can go and place an order for whatever product you were waiting for.

IMPORTANT! Always remember to delete your stack, either by running cdk destroy or by deleting it manually on the console.

A Few Notes on Web Crawling

Web crawling is a legal practice, but depending on the laws and regulations that apply to the place where you live (copyright, terms-of-service, data protection) what you decide to do with that data may be illegal and get you in trouble, so always exercise caution.

There are ethical ways of scraping the web, so as much as possible try to respect the guidelines of a website’s robots.txt, and learn how to do it in the most compliant way possible. This is not only so that you don’t get in trouble, but it’s also the right thing to do.
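As a small illustration of respecting robots.txt, Python’s standard library ships urllib.robotparser, which can tell you whether a given user agent may fetch a URL. The sketch below parses a made-up robots.txt; the rules, the shop.example domain, the stock-alarm-bot user agent, and the allowed_by_robots helper are all assumptions for illustration, not the rules of any real store.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration only. A real crawler would
# download the file from the store's own /robots.txt before requesting pages.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
"""


def allowed_by_robots(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


product_ok = allowed_by_robots(
    EXAMPLE_ROBOTS_TXT, "stock-alarm-bot", "https://shop.example/product/123.html"
)
checkout_ok = allowed_by_robots(
    EXAMPLE_ROBOTS_TXT, "stock-alarm-bot", "https://shop.example/checkout/cart"
)
```

With these example rules, the product page is allowed and the checkout path is not. A check like this could run before the GET request, skipping the crawl when the site asks crawlers to stay away from that path.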

Another important thing to keep in mind is that where you host your crawlers has a big impact on the results. Most stores have in place a few protections to ensure their site doesn’t get crawled by unwanted spiders—they often blacklist most cloud provider IP ranges to prevent them from sending requests to their sites, so chances are that your AWS Lambdas will not even be able to access the store without making requests through a proxy. The store that I used for this exercise was partially selected for this reason, as it’s an example of such a case—the requests my Lambda sent were actually blocked (it returned a 403 code), and I had to proxy the request to get the notification I showed at the end.
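One consequence worth spelling out: the handler shown earlier never looks at the HTTP status, so a blocked request simply produces a page without the availability string and looks exactly like "no stock". A minimal sketch of a guard follows. The helper name ensure_page_retrieved, the exception class, and the choice of which status codes count as "blocked" are assumptions made for illustration.

```python
class PageNotRetrievedError(RuntimeError):
    """Raised when the store did not return the product page."""


# Status codes that commonly indicate the request was refused or throttled
# (an assumption for this sketch; adjust to what the target site returns).
BLOCKED_STATUS_CODES = {401, 403, 429}


def ensure_page_retrieved(status_code: int, url: str) -> None:
    """Raise a descriptive error unless the response status is a 2xx success."""
    if status_code in BLOCKED_STATUS_CODES:
        raise PageNotRetrievedError(
            f"{url} refused the request (HTTP {status_code}); the crawler may be blocked"
        )
    if not 200 <= status_code < 300:
        raise PageNotRetrievedError(
            f"{url} returned unexpected HTTP status {status_code}"
        )
```

In the handler this would be called as ensure_page_retrieved(response.status_code, product_url) right after the GET request. The requests library also offers response.raise_for_status() for the same purpose. Either way, the unhandled exception fails the invocation and shows up in the function’s logs and error metrics instead of passing silently.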

The point is, web scraping is its own thing—it’s a big field with lots of nuances and specialized knowledge, and many people make a living as expert spider builders (cool title now that I think about it, Juan L. Orozco V. CSO / Chief Spider Officer). If you are interested in the topic, there are some great books out there, like the one with the Pangolin on the cover, that can give you the knowledge you need.

Improvements and Experiments

- Instead of receiving an email notification, modify the code to instead send you an SMS message.

- Learn some web scraping, and try to improve the function by making it more robust.

- Currently, this stack can only monitor one product page at a time, so I propose the following mod: Instead of passing the product URL and availability string as environment variables, retrieve those from events. Then, create scheduled events for querying different websites. This also requires you to modify the notification message to list the product that the notification is about.

- Right now, if a product becomes available it will just keep telling you about it every 30 minutes, which can become a bit annoying after a while. How would you go about preventing this? How can you implement a quiet period after stock is found for the first time?
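As a starting hint for the quiet-period experiment, the core decision can be a small pure function. The sketch below is one possible shape, not a full solution: should_notify is a hypothetical helper, and where the timestamp of the last notification gets stored between invocations is left open, since a Lambda function keeps no reliable state of its own.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def should_notify(
    last_notified_at: Optional[datetime],
    now: datetime,
    quiet_period: timedelta,
) -> bool:
    """Return True if no notification was sent yet or the quiet period has passed."""
    if last_notified_at is None:
        return True  # stock found for the first time
    return now - last_notified_at >= quiet_period


# Example: a 12-hour quiet period (an arbitrary value chosen for illustration)
quiet = timedelta(hours=12)
first_alert = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)

first_time = should_notify(None, first_alert, quiet)
half_hour_later = should_notify(first_alert, first_alert + timedelta(minutes=30), quiet)
next_day = should_notify(first_alert, first_alert + timedelta(hours=24), quiet)
```

Here first_time and next_day are True while half_hour_later is False, so the run 30 minutes after an alert stays silent. The handler would call this before publishing and record the new timestamp only after a successful publish.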

This time we got to play with SNS topics and EventBridge for the first time. The more services and constructs you learn and master, the better you’ll become at designing and building cloud-native solutions, and this project just added two new valuable tools to your arsenal.

I hope you find this useful!