As you might remember, supervised learning makes use of a training set to teach a model how to perform a task or predict a value (or values). It's also important to remember that this training data needs to be labeled with the expected result/right answer for every individual example in the set.

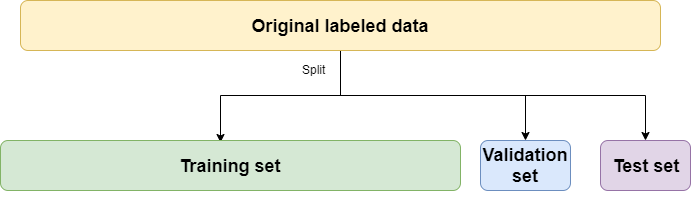

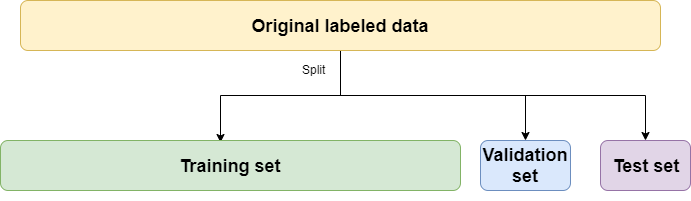

In projects that use supervised learning, some effort is required up front to build a dataset of labeled examples. In practice, you will need to extract three subsets from this labeled data: the training, validation, and test sets. This step is essential for evaluating the performance of different models and the effect of hyperparameter tuning.

Although this is a fundamental topic, many early practitioners in data science find it a bit confusing. Understanding how the validation and test sets are used, and how they differ, is important and doesn't require much effort.

So let's look at how both sets are used to understand why we need them.

Validation and test sets, where do they come from?

Both sets are derived from your original labeled data. That data usually needs to be compiled from different sources across an organization and transformed into a structured format. This is a huge topic, and the goal of this article is not to explore data mining techniques, so we will just assume that we have a dataset made of labeled examples.

Once you have the labeled data, you need to split it into three sets:

- Training set: The data you will use to train your model. It is fed into a learning algorithm that generates a model, which maps inputs to outputs.

- Validation set: This is smaller than the training set, and is used to evaluate the performance of models with different hyperparameter values. It's also used to detect overfitting during the training stages.

- Test set: This set is used to get an idea of the final performance of a model after hyperparameter tuning. It's also useful for getting an idea of how different models (SVMs, neural networks, random forests...) perform against each other.

Now, some important considerations:

- The validation and test sets are usually much smaller than the training set. Depending on the amount of data you have, you usually set aside 80%-90% for training and the rest is split equally for validation and testing. Many things can influence the exact proportion of the split, but in general, the biggest part of the data is used for training.

- The validation and test sets are put aside at the beginning of the project and are not used for training. This might seem obvious, but it's important to remember that they are there to evaluate the performance of the model. Evaluating a model on the data used to train it will make you believe it's performing better than it would in reality.

- All three sets need to be representative. This means that each set needs to contain diverse examples that represent the problem space. For example, in a multiclass classification problem, you want to ensure that all three sets contain enough examples of each class. Otherwise, you run the risk of training a model on a non-representative subset of the data, or of validating and testing it on data that doesn't reflect the real problem.
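The split described above can be sketched in plain Python. This is a minimal sketch, assuming an 80/10/10 split and a hypothetical `stratified_split` helper written for this example: it shuffles and cuts each class separately, so all three sets keep roughly the class proportions of the full dataset. Libraries such as scikit-learn offer `train_test_split` with a `stratify` argument for the same purpose.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_pct=80, val_pct=10, seed=0):
    """Hypothetical helper: split example indices into train/val/test lists.

    Each class is shuffled and cut separately, so every set keeps roughly
    the class proportions of the full labeled dataset. Integer percentages
    keep the arithmetic exact; any rounding remainder goes to the test set.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train, val, test = [], [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_train = len(indices) * train_pct // 100
        n_val = len(indices) * val_pct // 100
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]
    return train, val, test


# Hypothetical labels: 1,000 examples spread unevenly over three classes.
labels = ["cat"] * 500 + ["dog"] * 300 + ["bird"] * 200
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))  # → 800 100 100
```

Because every class is cut with the same percentages, even the smallest class ("bird") ends up with examples in the validation and test sets.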

Good, it's time to see where each set is used.

The validation set and hyperparameter tuning

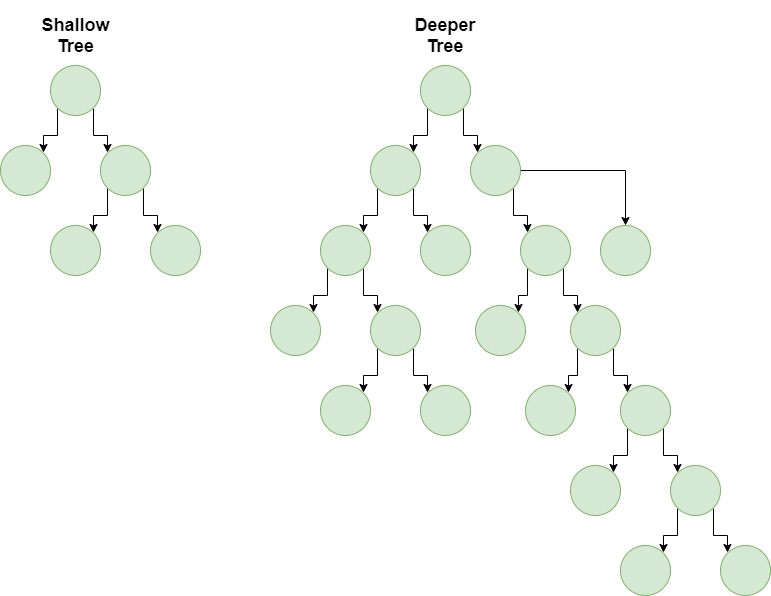

Different machine learning algorithms for training models have special values you can tweak before the learning process begins. These values are called hyperparameters and are used to configure the training process that will produce your models.

Changing the values of hyperparameters alters the resulting model; they are usually used to configure how the algorithm learns relationships in data. A typical use of hyperparameters is setting the complexity of the model: with the exact same algorithm, you can create a more complex model that can learn complicated relationships or a simpler model that learns simpler ones.

A basic example of a hyperparameter is the depth of a decision tree. A shallow tree (just a couple of levels) is a much simpler model and can only learn very basic relationships between inputs and outputs. Training is relatively simple and fast, and for a lot of applications, it's just enough.

A deeper tree can learn much more complex relationships and tackle harder problems, but training it takes longer and you are at risk of overfitting.
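To make the difference concrete, here is a minimal sketch using scikit-learn's `DecisionTreeClassifier`, where depth is controlled by the `max_depth` parameter. The dataset is synthetic (generated with `make_classification`) and the depth values are arbitrary choices for illustration; the sketch compares a depth-2 tree with an unrestricted one on both the training data and a held-out validation set.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic labeled data; flip_y assigns the class of ~10% of samples at
# random, adding label noise that a very deep tree can end up memorizing.
X, y = make_classification(n_samples=2000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

results = {}
for max_depth in (2, None):  # None lets the tree grow until its leaves are pure
    tree = DecisionTreeClassifier(max_depth=max_depth, random_state=0)
    tree.fit(X_train, y_train)
    results[max_depth] = {
        "depth": tree.get_depth(),
        "train_acc": tree.score(X_train, y_train),  # accuracy on seen data
        "val_acc": tree.score(X_val, y_val),        # accuracy on unseen data
    }
    print(max_depth, results[max_depth])
```

On noisy data like this, the unrestricted tree fits the training set perfectly, while its validation accuracy typically lags well behind: that gap is the overfitting risk described above.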

So, which hyperparameters produce the model that performs best on your data? This is hard or impossible to calculate using theoretical tools, so we opt for a much more hands-on approach: try different algorithms and hyperparameter configurations. To evaluate their performance, we need data the model hasn't seen yet, so we use the validation set.

You will run your models on the validation set and see how different hyperparameter configurations perform. Based on the results, you will tweak them until the model performs well enough for the specific problem you are trying to solve.
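The tuning loop described above might look like the following minimal sketch. It assumes scikit-learn, synthetic data, and an arbitrary list of candidate `max_depth` values. The test set is split off first and deliberately left untouched; each candidate is trained on the training set and scored only on the validation set.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=2000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
# Hold out 200 examples as the test set and keep them out of tuning.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=200, stratify=y, random_state=0)
# Split the remaining 1,800 into 1,600 training and 200 validation examples.
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=200, stratify=y_rest, random_state=0)

candidate_depths = [1, 2, 4, 8, 16, None]  # arbitrary values to try
val_scores = {}
for depth in candidate_depths:
    model = DecisionTreeClassifier(max_depth=depth, random_state=0)
    model.fit(X_train, y_train)
    val_scores[depth] = model.score(X_val, y_val)  # validation accuracy

# Pick the configuration with the highest validation accuracy.
best_depth = max(candidate_depths, key=val_scores.get)
print(val_scores)
print("best max_depth:", best_depth)
```

In practice you would usually search over more hyperparameters (and possibly several algorithms), but the pattern stays the same: train on the training set, compare on the validation set.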

Comparing results on the training and validation sets is also how you can notice overfitting, a topic we will explore in a future article.

After you are done tweaking hyperparameters and have a few promising models, it's time to run some final tests.

Evaluating performance on the test set

Your final tests need data your models haven't seen yet.

OK, but we already have a validation set. Why do we need another one?

This matters because some information from the validation set might leak into the models. By tuning hyperparameters against the validation set, you might be teaching your models some of its idiosyncrasies (quirks that are not part of the general data). You can end up with models that are over-tuned to perform great on the validation set but don't perform as well on real data.

That's why you need a test set: a collection of examples that don't form part of the training process and don't participate in model tuning. This set doesn't leak any information into your models, so you can safely use it to get an idea of how well the model will perform in production.

A test set lets you compare different models in an unbiased (or less biased) way at the end of the training process. In other words, you can use the test set as a final confirmation of your model's predictive power.
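A minimal sketch of that final comparison, assuming scikit-learn and synthetic data. The two finalists and their hyperparameter values are hypothetical, standing in for whatever your validation-set tuning produced; each one is scored exactly once on a test set that took no part in tuning. For brevity, the validation split used during tuning is not shown.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=2000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
# X_test is held out; X_train plays the role of the training set here.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=200, stratify=y, random_state=0)

# Hypothetical finalists: assume these hyperparameters were already chosen
# on a separate validation set, never on X_test.
finalists = {
    "decision tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "random forest": RandomForestClassifier(n_estimators=200, max_depth=8,
                                            random_state=0),
}

test_scores = {}
for name, model in finalists.items():
    model.fit(X_train, y_train)
    # Score each finalist once, on data that played no part in tuning.
    test_scores[name] = model.score(X_test, y_test)
    print(f"{name}: test accuracy = {test_scores[name]:.3f}")
```

If you go back and change hyperparameters after looking at these numbers, the test set starts to behave like a second validation set and loses its value as an unbiased check.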

What happens if I don't have enough data?

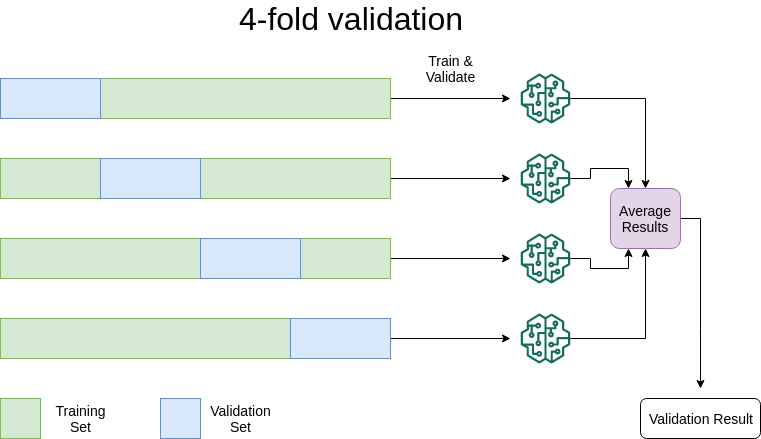

Sometimes you don't have enough data for a proper training/validation split. Depending on how you split it, you run the risk of not having enough examples to train the model properly, or of getting unreliable validation results. In these scenarios, a common approach is to set aside some data for the test set and perform k-fold cross-validation on the rest.

In k-fold cross-validation, you split the data into k subsets (folds) of roughly equal size. Each fold takes one turn as the validation set while a model is trained on the remaining k-1 folds, so you end up training k models. After that, you evaluate each model on its validation fold and average the results. This technique is especially useful if you don't have much data available for training. The following is an example when k equals 4.
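A minimal sketch of the k = 4 case, assuming scikit-learn and a small synthetic dataset. A test set is set aside first, and `StratifiedKFold` (a variant of k-fold that keeps class proportions similar across folds) rotates the validation role through the remaining data. The model and its `max_depth` value are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, train_test_split
from sklearn.tree import DecisionTreeClassifier

# A deliberately small synthetic labeled dataset: 200 examples.
X, y = make_classification(n_samples=200, n_features=10, n_informative=4,
                           random_state=0)
# Set aside 40 examples as the test set; cross-validation never sees them.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=40, stratify=y, random_state=0)

kfold = StratifiedKFold(n_splits=4, shuffle=True, random_state=0)
fold_scores = []
for train_idx, val_idx in kfold.split(X_rest, y_rest):
    # This fold is the validation set; the other 3 folds train a fresh model.
    model = DecisionTreeClassifier(max_depth=3, random_state=0)
    model.fit(X_rest[train_idx], y_rest[train_idx])
    fold_scores.append(model.score(X_rest[val_idx], y_rest[val_idx]))

print("per-fold accuracy:", np.round(fold_scores, 3))
print("mean accuracy:", np.mean(fold_scores))
```

scikit-learn's `cross_val_score(model, X_rest, y_rest, cv=kfold)` wraps the same loop in a single call. The test set is still evaluated only once, after cross-validation has guided your choices.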

This has the advantage of giving you a more reliable estimate of the model's performance without needing lots of data, at the cost of training k models instead of one. K-fold cross-validation is an extremely popular approach and usually works surprisingly well.

Knowing this stuff is important

In this article, we covered concepts that are fundamental to a career in data science or machine learning. If you want to break into this field and start going to interviews, you will likely be asked about validation and test sets.

Hopefully, after reading this article you'll be in much better shape to answer those questions.

Thank you for reading!

What to do next

- Share this article with friends and colleagues. Thank you for helping me reach people who might find this information useful.

- The folks at Neptune.ai wrote an article about a related topic: hyperparameter tuning. Check out their article if you want a more hands-on look at how to use what we just learned to improve the performance of your models.

- This article is based on Data Science for Business: What You Need to Know about Data Mining and Data-Analytic Thinking. This and other very helpful books can be found in the recommended reading list.

- Send me an email with questions, comments, or suggestions (you'll find my address on the About Me page).